Introduction to the survey

The survey was conducted on tablet computers, and respondents answered the questions independently. During the one-day event, KITE project researchers gathered 101 survey responses in total. Respondents formed a varied group, from schoolchildren to seniors aged 9 to 73, which makes the insights particularly interesting. However, the data collection has its limitations: the event was a science event focusing on a smart city solution, which may have attracted similar kinds of people and thus narrowed the diversity of respondents. Despite this, the survey reveals interesting notions of how (smart city) citizens perceive AI and what their real-life experiences, needs, and concerns related to AI technologies are. All questions were voluntary, and some allowed multiple choices. This has been taken into account in the analysis.

AI is seen as understandable and acceptable

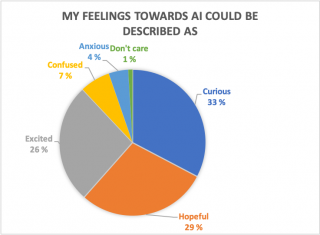

Most respondents found AI a familiar concept: 94% of the respondents agreed that AI is familiar to them. In addition, 88% of the respondents described themselves as active followers, mainstream followers, or forerunners in technology development. The dystopian horror stories typical of public AI discourse were not visible in this survey, and feelings about AI were mostly positive. Many respondents described themselves as curious, hopeful, or excited about AI. This is a positive sign for smart city development because it indicates AI acceptance among citizens: how AI development is communicated to the public, and how the public perceives this information, will critically influence technology adoption and use (Cave et al. 2018). However, more ambivalent feelings, such as confusion, also appeared in the responses.

Who decides about the use of AI?

One of the most important elements of human-centered AI is user awareness. When users know they are interacting with an AI system, they can judge the advantages and limitations of the system and choose whether or not to work with it (Engler 2020). However, people are not always aware of being “users” of AI, so human-centered AI must be transparent, especially in situations where the consequences are critical.

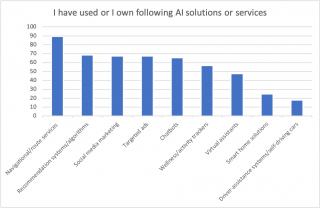

According to our survey, the most familiar AI technologies are navigation services. This is not surprising, since navigation services are typically built into devices such as smartphones. They are easy and straightforward to use, and users can independently choose if and when to use them. Nonetheless, it is interesting that navigation services are followed by recommendation algorithms or social media marketing: AI solutions that lie beyond the users’ direct scope and concrete use. In most cases, users can adjust, for example, the ads or recommendations they see, but these are still heavily shaped by AI algorithms that gather personal data and track user behavior. Even though automation is one of the driving forces of AI development, maintaining user control remains important. According to Balaram et al. (2018), people would be more likely to support AI applications if they were given more agency.

The concern of losing humanness vs. the benefit of saving time

AI solutions are typically promoted as a weapon against time: a time-saving tool that removes mundane tasks and releases human capacity to its full potential. This view was also visible in the survey: 79% of the respondents saw time-saving features as the main expected benefit of AI, and 75% assumed that AI will improve work efficiency. On the other hand, the threats or concerns respondents expected from AI were associated with a decrease in humanness (67%) and loss of human contact (65%), as well as with data collection, privacy, and security (65%).

It is noteworthy that technological change is always a trade-off: the benefits a new technology brings also come with negative side effects (Postman 1998). For example, anthropomorphism might create problems for AI transparency: customer service calls, chatbots, and interactions on social media and in virtual reality may become progressively less evidently artificial (Engler 2020). People still need people, and it is important to keep providing opportunities for human-to-human communication. In addition, we need to keep a ‘human in the loop’. While automation has the potential to make us more human by taking over repetitive tasks, it will require us to be more critical and to reflect on our practices to find where human intelligence will be necessary (Hume 2018).

Building better societies and well-being with AI

Human-centric design and human-centered AI do not highlight ‘human’ without reason: respecting human values and needs while designing AI applications is a core element of socially acceptable and responsible AI. It is important to recognize what kinds of solutions are usable in particular contexts and how these solutions serve specific user needs. In addition, AI should be designed with the wider sociocultural context and its norms in mind (Ford et al. 2015).

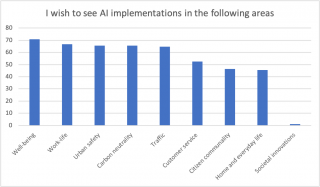

In our survey, respondents wished to see AI solutions especially in societally significant areas such as well-being, working life, safety, and carbon-neutral buildings. These categories are, of course, very broad: ‘well-being’, for example, can be interpreted in many ways (personal well-being, such as personal health, or societal well-being, such as education). Unfortunately, the survey could not explore this topic in depth. However, most of the chosen areas can be seen to benefit both the individual and society, whereas AI use in the home and everyday life, for instance, was ranked relatively low. It seems that AI solutions are expected to be realized mainly at a societal level.

We hear you – KITE project focusing on human-centered AI

The survey responses presented in this blog post were gathered during a single one-day event, so despite the varied group of respondents, the results are not very generalizable. However, they provide interesting insight into citizens’ perceptions of AI and smart city solutions. Even though feelings toward AI are mainly positive and AI is seen as beneficial, for instance in improving work efficiency, concerns about losing humanness are also visible. It is therefore important to design AI solutions with all stakeholders in mind, including those who are influenced by AI without directly using it. This becomes increasingly critical as AI’s agency in society grows.

The KITE project (Kaupunkiseudun ihmiskeskeiset tekoälyratkaisut, ‘Human-centric AI solutions for the city region’) aims to develop human-centric AI solutions and their design methods together with companies, drawing on applied research on human-centered technology. The purpose is to create knowledge and skills in the design and development of AI solutions that are meaningful, understandable, acceptable, and ethically sustainable for their users.

If you or your company are interested in the topic or wish to collaborate within the project, feel free to contact Ritva Savonsaari (ritva.savonsaari(at)tuni.fi) to discuss possible collaboration.

References

Balaram, B., Greenham, T., & Leonard, J. (2018). Artificial Intelligence: Real public engagement. RSA, London. Retrieved November 5, 2018, from https://www.thersa.org/globalassets/pdfs/reports/rsa_artificial-intelligence---real-public-engagement.pdf

Cave, S., Craig, C., Dihal, K., Dillon, S., Montgomery, J., Singler, B., & Taylor, L. (2018). Portrayals and Perceptions of AI and Why They Matter. The Royal Society, London. Retrieved from https://royalsociety.org/-/media/policy/projects/ai-narratives/AI-narratives-workshop-findings.pdf

Engler, A. (2020). The case for AI transparency requirements. The Brookings Institution’s Artificial Intelligence and Emerging Technology (AIET) Initiative. Retrieved from https://www.brookings.edu/research/the-case-for-ai-transparency-requirements/

Ford, K. M., Hayes, P. J., Glymour, C., & Allen, J. (2015). Cognitive Orthoses: Toward Human-Centered AI. AI Magazine, 36(4), 5-8. https://doi.org/10.1609/aimag.v36i4.2629

Hume, K. (2018). When Is It Important for an Algorithm to Explain Itself? Harvard Business Review. Retrieved from https://hbr.org/2018/07/when-is-it-important-for-an-algorithm-to-explain-itself