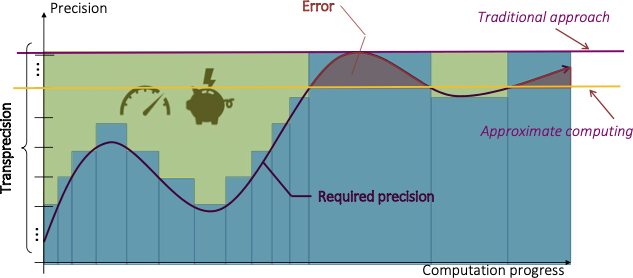

Transprecision computing is an emerging approach for improving computational efficiency. As depicted in the figure above, traditional methods typically use the maximum level of precision throughout the entire computation. However, always computing at the highest floating-point precision increases power consumption and computation time, which is a concern for applications where energy efficiency and speed are paramount. Programs typically go through many computational stages to produce a result, and different stages may need different levels of precision. Selecting the right precision for each stage is therefore essential for optimizing a program's performance and efficiency. One established approach to improving efficiency is Approximate Computing (AC), which benefits applications that can tolerate some inexactness in their outputs. In some scenarios, AC uses a single data type throughout the computation, regardless of the requirements of individual stages. While this lowers computational cost, it can introduce errors, especially in the stages that require higher precision.

This is where transprecision computing comes into play. It lets programmers use different precision levels for different parts of a program, balancing accuracy and efficiency: computational cost goes down while each stage keeps the precision and accuracy it actually needs. This makes transprecision computing a promising step toward greater computational efficiency. It is especially valuable in data analysis, robotics, and the Internet of Things (IoT), where computations run continuously and energy efficiency is crucial.
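As a minimal sketch of the difference between a single reduced-precision type and a transprecision split, the Python snippet below computes a dot product two ways: once with every stage (multiplication and accumulation) rounded to IEEE 754 binary16 (float16), and once with the products kept in float16 but the running sum accumulated in binary64. The helper names (`to_fp16`, `dot_uniform_fp16`, `dot_transprecision`) are hypothetical and not part of any library; float16 rounding is emulated with the standard-library `struct` module's `"e"` format.

```python
import struct


def to_fp16(x: float) -> float:
    """Round a Python float (binary64) to the nearest IEEE 754 binary16 value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def dot_uniform_fp16(a, b):
    """Single data type: multiply AND accumulate in float16.

    Products and sums of two float16 values are exact in binary64,
    so rounding the binary64 result once emulates float16 arithmetic.
    """
    acc = 0.0
    for x, y in zip(a, b):
        prod = to_fp16(to_fp16(x) * to_fp16(y))
        acc = to_fp16(acc + prod)  # accumulator is also squeezed into float16
    return acc


def dot_transprecision(a, b):
    """Transprecision: float16 products, binary64 accumulator."""
    acc = 0.0
    for x, y in zip(a, b):
        acc += to_fp16(to_fp16(x) * to_fp16(y))  # only the products are narrowed
    return acc


if __name__ == "__main__":
    ones = [1.0] * 4096
    print(dot_uniform_fp16(ones, ones))    # 2048.0
    print(dot_transprecision(ones, ones))  # 4096.0
```

With 4096 pairs of ones, the uniform float16 version stalls at 2048: float16 cannot represent 2049, and the tie rounds back to the even neighbor 2048. Giving only the accumulation stage more precision restores the exact result while the bulk of the data stays in the narrow format.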

Nowadays, most computing systems adopt 32- and 64-bit floating-point (FP) formats, known as single-precision and double-precision formats. FP formats narrower than 32 bits (such as float16, bfloat16, and float8), often called smallFloats, have become increasingly important in modern computing systems that still need adequate precision and the ability to represent a broad range of numbers. Using smallFloats, particularly on edge devices, can lead to significant energy savings. These savings come from simpler arithmetic circuitry and from lower memory bandwidth demands when moving data between storage and processing units.
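To make the precision and range trade-offs between smallFloats concrete, the sketch below emulates two of them in Python: float16 through the standard `struct` module's `"e"` (IEEE 754 binary16) format, and bfloat16 through a hypothetical `to_bf16` helper that keeps the upper 16 bits of a float32 with round-to-nearest-even (NaN and infinity handling omitted). float8 has no standard-library support and is left out.

```python
import struct


def to_fp16(x: float) -> float:
    """Round to IEEE 754 binary16: 1 sign, 5 exponent, 10 fraction bits."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_bf16(x: float) -> float:
    """Round to bfloat16: 1 sign, 8 exponent, 7 fraction bits.

    bfloat16 is the upper half of a float32, so the float32 bit pattern is
    rounded to its top 16 bits with round-to-nearest-even.
    NaN/infinity handling is omitted in this sketch.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    lsb = (bits >> 16) & 1  # lowest bit that survives truncation (tie-breaker)
    bits = (bits + 0x7FFF + lsb) & 0xFFFF0000
    return struct.unpack("<f", struct.pack("<I", bits))[0]


if __name__ == "__main__":
    # Storage per value: 2 bytes for a 16-bit smallFloat vs 4 and 8 bytes.
    print(struct.calcsize("<e"), struct.calcsize("<f"), struct.calcsize("<d"))  # 2 4 8

    # Precision: float16 keeps more fraction bits than bfloat16.
    x = 1 / 3
    print(to_fp16(x), to_bf16(x))  # 0.333251953125 0.333984375

    # Range: bfloat16 shares float32's 8-bit exponent; float16 tops out at 65504.
    print(to_bf16(1e30))
    try:
        to_fp16(1e30)
    except OverflowError:
        print("1e30 does not fit in float16")
```

Halving the bytes per value is where the memory-bandwidth saving comes from; which 16-bit format to pick depends on whether a given stage needs more fraction bits (float16) or more exponent range (bfloat16).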

This paper presents TransLib, a comprehensive open-source library of eight kernels intended as building blocks for applications based on the principles of transprecision computing. For each kernel, the library provides a tunable golden model written in Python and a C implementation optimized for IoT end-nodes. TransLib is designed for portability and can easily be adapted to other architectures. As a case study, we used the PULP platform as the target to assess the accuracy and validate the performance of the kernels in different scenarios, including multi-core and vectorized variants.
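As a hypothetical illustration of what a tunable golden model can look like (not TransLib's actual code or API), the Python sketch below models a dot-product kernel whose input/product format and accumulator format are parameters. An assumed exhaustive `tune` search returns the combination with the fewest total bits whose result stays within a relative-error bound of a binary64 reference. All names (`FORMATS`, `golden_dot`, `rel_error`, `tune`) are illustrative. Narrow arithmetic is emulated by computing in binary64 and rounding with `struct`, which tracks real float16/float32 arithmetic closely but is not guaranteed to be bit-exact for every mixed-format accumulation.

```python
import struct
from typing import Callable, Dict, Sequence, Tuple

# Hypothetical format table: name -> (bit width, rounding function).
FORMATS: Dict[str, Tuple[int, Callable[[float], float]]] = {
    "float16": (16, lambda x: struct.unpack("<e", struct.pack("<e", x))[0]),
    "float32": (32, lambda x: struct.unpack("<f", struct.pack("<f", x))[0]),
    "float64": (64, lambda x: x),
}


def golden_dot(a: Sequence[float], b: Sequence[float], in_fmt: str, acc_fmt: str) -> float:
    """Dot product with inputs/products rounded to in_fmt and the sum to acc_fmt."""
    q_in = FORMATS[in_fmt][1]
    q_acc = FORMATS[acc_fmt][1]
    acc = 0.0
    for x, y in zip(a, b):
        prod = q_in(q_in(x) * q_in(y))
        acc = q_acc(acc + prod)
    return acc


def rel_error(approx: float, exact: float) -> float:
    """Relative error, falling back to absolute error when exact is zero."""
    return abs(approx - exact) / abs(exact) if exact != 0 else abs(approx)


def tune(a: Sequence[float], b: Sequence[float], max_rel_err: float) -> Tuple[str, str]:
    """Return the cheapest (in_fmt, acc_fmt) pair, by total bit width,
    whose result is within max_rel_err of the binary64 reference."""
    ref = golden_dot(a, b, "float64", "float64")
    candidates = sorted(
        ((i, c) for i in FORMATS for c in FORMATS),
        key=lambda pair: FORMATS[pair[0]][0] + FORMATS[pair[1]][0],
    )
    for in_fmt, acc_fmt in candidates:
        if rel_error(golden_dot(a, b, in_fmt, acc_fmt), ref) <= max_rel_err:
            return in_fmt, acc_fmt
    return "float64", "float64"  # unreachable in practice: the reference itself has zero error


if __name__ == "__main__":
    ones = [1.0] * 4096
    print(tune(ones, ones, max_rel_err=1e-3))  # ('float16', 'float32')
```

On the all-ones input, float16 inputs with a float32 accumulator already meet a 1e-3 bound, because every product is exact in float16 and float32 represents every partial sum up to 4096 exactly. Minimizing the summed bit width is a simplifying assumption; a practical cost model might weight each variable by how often it is loaded, stored, or operated on.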

The paper is available at: https://zenodo.org/record/8159377

The published IEEE version is available at: https://ieeexplore.ieee.org/document/10136916