Deep learning models are growing exponentially in size, demanding immense computational power and energy. With AI expected to consume more energy than we can produce by 2040, finding efficient ways to run these models is crucial.

In a recently defended PhD thesis, Piotr Kluska, tackled this challenge by developing new compression techniques for Vision Transformer (ViT) architectures. By leveraging post-training quantization and a novel Hybrid Quantization (HQ) method, the research demonstrated how ViT models can retain high accuracy while significantly reducing memory and energy consumption.

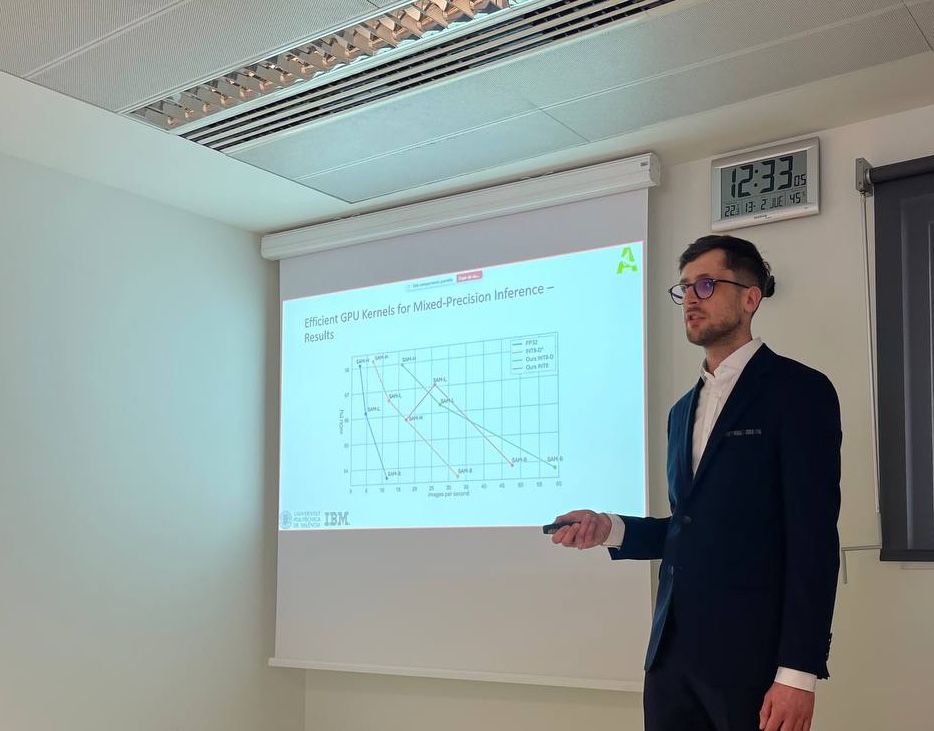

The thesis also introduced QAttn, an open-source framework that integrates optimized quantized GPU kernels into PyTorch. This innovation led to up to 7.34x performance improvements, paving the way for more sustainable deep learning applications.

This work not only enhances efficiency in ViT models but also sets the stage for future AI research focused on reducing computational costs without sacrificing performance.

Read Piotr’s thesis here: https://zenodo.org/records/15043962