Connected vehicular services are becoming integral to modern transportation systems. They rely heavily on high-volume data processing, with AI models deployed on high-performance embedded devices, and on reliable communication for efficient operation. Keeping the computing and communication behind these operations energy-efficient can be framed as an optimization problem. To address this challenge, this study examines software approximation and edge AI approaches for saving energy in vehicular services. Focusing on data-intensive vehicular tasks, we present an empirical case study centered on high-definition (HD) maps that uses approximate convolution and model partitioning. We further examine AI model energy consumption across varying approximation ratios on embedded edge devices. Drawing on insights from model deployment, we also envision an approximate Edge AI pipeline, setting the stage for the development and deployment of energy-efficient vehicular services.

Methods and Results:

The study examines the impact of approximate convolution operations on the BiSeNet architecture, which is primarily used for segmentation tasks in vision applications.

- The research adopts the BiSeNet architecture for semantic modeling and focuses on its convolutional layers. These layers are computationally intensive, which makes them an apt target for approximation. An approximation function is applied to compress the baseline architecture, contributing to energy-efficient processing.

- Model partitioning splits the model for vision-based applications so that its operations can be parallelized. By partitioning input and output tensors, deep neural network operations can be executed concurrently, which facilitates deploying models on resource-constrained devices.
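
To illustrate how partitioning input and output tensors lets a convolution run as independent pieces, here is a minimal sketch in plain Python. It is not the study's implementation: the function names `conv2d_valid` and `partitioned_conv2d`, the row-band split, and the toy image and kernel are all assumptions chosen for illustration. Each band of output rows is computed from its own slice of the input plus a small overlap (a "halo" of kernel height minus one rows), so the bands could be dispatched to separate workers or devices.

```python
def conv2d_valid(image, kernel):
    """Plain 2D 'valid' cross-correlation on nested lists (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def partitioned_conv2d(image, kernel, num_parts=2):
    """Split the output rows into bands and compute each band independently.

    Hypothetical helper: the bands are processed in a loop here, but each
    iteration depends only on its own input tile, so they could run concurrently.
    """
    kh = len(kernel)
    out_h = len(image) - kh + 1
    band = -(-out_h // num_parts)  # ceiling division: output rows per band
    result = []
    for start in range(0, out_h, band):
        stop = min(start + band, out_h)
        # Input rows for this band, plus a halo of kh - 1 rows below it.
        tile = image[start:stop + kh - 1]
        result.extend(conv2d_valid(tile, kernel))
    return result


# Toy 8x6 input and a 3x3 kernel (illustrative values only).
image = [[(r * 7 + c * 3) % 11 for c in range(6)] for r in range(8)]
kernel = [[1, 0, -1], [2, 0, -2], [1, 0, -1]]

full = conv2d_valid(image, kernel)
parts = partitioned_conv2d(image, kernel, num_parts=3)
print(parts == full)
```

The overlap between tiles is the cost of this kind of partitioning: a larger kernel or a finer split means more input rows are duplicated across workers.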

Results and Discussion

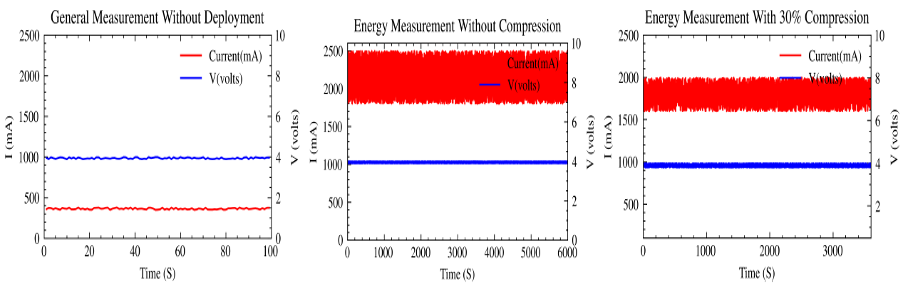

Experimental results underscore the potential of AI model approximation to improve energy efficiency by targeting the convolution operation. Approximating the convolutional layer reduced both model size and energy consumption. A trade-off analysis revealed that a modest approximation of 10-35% can be employed to balance energy savings against model performance metrics such as accuracy.
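
To make the link between an approximation ratio and model size concrete, the sketch below shows one possible reading of it. The summary does not specify the approximation function, so this example assumes magnitude-based filter pruning as a stand-in: the ratio is treated as the fraction of convolution filters removed, ranked by L1 norm. The names `l1_norm`, `approximate_filters`, and `weight_count`, and the toy filter bank, are hypothetical.

```python
def l1_norm(filt):
    """Sum of absolute weights in one filter (shape: in_channels x k x k)."""
    return sum(abs(w) for channel in filt for row in channel for w in row)


def approximate_filters(filters, ratio):
    """Keep roughly the (1 - ratio) fraction of filters with the largest L1 norm.

    Assumption: 'approximation ratio' means the share of filters dropped.
    The study's actual approximation function may differ.
    """
    if not 0.0 <= ratio < 1.0:
        raise ValueError("ratio must be in [0, 1)")
    keep = max(1, round(len(filters) * (1.0 - ratio)))
    ranked = sorted(range(len(filters)),
                    key=lambda i: l1_norm(filters[i]), reverse=True)
    kept = sorted(ranked[:keep])  # preserve the original filter order
    return [filters[i] for i in kept]


def weight_count(filters):
    """Number of scalar weights in a filter bank."""
    return sum(len(row) for filt in filters for channel in filt for row in channel)


# Toy filter bank: 8 filters, 2 input channels, 3x3 kernels (illustrative values).
filters = [
    [[[(f + 1) * ((c + i + j) % 3 - 1) for j in range(3)] for i in range(3)]
     for c in range(2)]
    for f in range(8)
]

approx = approximate_filters(filters, ratio=0.25)
print(weight_count(filters), weight_count(approx))
```

Under this assumption the weight count of a layer shrinks linearly with the ratio, and so does its multiply-accumulate count. The resulting loss in accuracy has to be measured on the deployed model and cannot be derived from the weights alone.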

Conclusion

In this study, we investigated the utility of AI model approximation and model partitioning for achieving energy-efficient connected vehicular services. The research highlights the promise of approximation techniques, particularly within convolutional layers, for reducing energy consumption in exchange for some accuracy. This work provides a basis for future exploration of other compute-intensive layers and models, such as approximating fully connected layers, and of a holistic approach to deploying energy-efficient or energy-aware AI models with a balanced trade-off between metrics. By optimizing across these performance metrics, we can tune AI models and the services that depend on them for deployment.

The paper is available at: https://zenodo.org/record/7981282

IEEE print is available at: https://ieeexplore.ieee.org/document/10089724