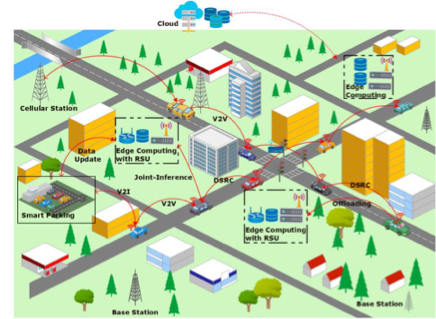

Deploying artificial intelligence (AI) algorithms offers automated solutions that influence decision-making processes. Currently proposed methods include deploying these AI models in a federated or distributed setting on edge devices for collaborative learning and joint inference. However, these AI models can sometimes produce biased outputs, especially in visual applications that deal with familiar groups or identical sets of classes. Bias in an AI model can lead to inaccurate classifications and predictions, and even to denial of service, affecting protected or under-represented classes within a dataset. This paper explores state-of-the-art object detection and classification models using metrics such as selectivity score and cosine similarity on driving datasets. The focus is on perception tasks for vehicular edge scenarios, with the aim of developing unbiased AI models for future vehicular edge services.

Methods and Results:

The study examines how data diversity and the instances included in the test and validation sets affect the generalization of neural networks. Popular AI models (ResNet18, SqueezeNet, DenseNet and BiraNet) are trained and evaluated on the nuScenes and biased car datasets. The experiments analyze the selectivity score, accuracy, and mean average precision (mAP) while varying the data diversity. The results indicate that as data diversity increases, the accuracy of the neural networks may decline. The selectivity scores of neurons also reflect the difficulty the models face in learning from diverse and imbalanced datasets. Furthermore, we use cosine similarity analysis to demonstrate how well the models perform on unseen samples.
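The two neuron- and feature-level metrics can be sketched as follows. This is a minimal, pure-Python illustration, not the authors' code. It assumes the class selectivity index of Morcos et al. (2018), (μ_max − μ_−max) / (μ_max + μ_−max), where μ_max is a neuron's largest class-conditional mean activation and μ_−max is the mean over the remaining classes; the paper may define its selectivity score differently. The function names `class_means`, `selectivity_index` and `cosine_similarity` are hypothetical, and activations are assumed to be non-negative (post-ReLU).

```python
import math
from collections import defaultdict
from typing import Dict, Sequence


def class_means(activations: Sequence[float], labels: Sequence[int]) -> Dict[int, float]:
    """Mean activation of a single neuron for each class label."""
    sums: Dict[int, float] = defaultdict(float)
    counts: Dict[int, int] = defaultdict(int)
    for act, label in zip(activations, labels):
        sums[label] += act
        counts[label] += 1
    return {label: sums[label] / counts[label] for label in sums}


def selectivity_index(activations: Sequence[float], labels: Sequence[int]) -> float:
    """Class selectivity of one neuron (assumed Morcos et al. 2018 definition).

    (mu_max - mu_rest) / (mu_max + mu_rest), where mu_max is the largest
    class-conditional mean and mu_rest is the average of the other class means.
    Assumes non-negative activations and at least two classes.
    """
    means = sorted(class_means(activations, labels).values(), reverse=True)
    if len(means) < 2:
        raise ValueError("need activations from at least two classes")
    mu_max = means[0]
    mu_rest = sum(means[1:]) / len(means[1:])
    denom = mu_max + mu_rest
    # A neuron that never fires is treated as non-selective.
    return 0.0 if denom == 0 else (mu_max - mu_rest) / denom


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (1 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        raise ValueError("cosine similarity is undefined for zero vectors")
    return dot / (norm_u * norm_v)


if __name__ == "__main__":
    # Toy neuron that responds mostly to class 0.
    acts = [4.0, 4.0, 0.0, 0.0, 1.0, 1.0]
    labels = [0, 0, 1, 1, 2, 2]
    print(round(selectivity_index(acts, labels), 3))  # 0.778
```

A score near 1 means the neuron fires almost exclusively for one class, while a score near 0 means it responds evenly across classes. One possible use of `cosine_similarity` for the unseen-sample analysis is to compare the embedding of a test image with the mean training embedding of its class, where a low value hints at a class the model has not generalized well.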

To assess the models’ performance on the nuScenes dataset, which covers different weather conditions, the study measures average precision for the car and pedestrian classes, both of which are highly represented in nuScenes. The results show that performance varies with weather conditions, indicating the need to consider data diversity during model training.
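Per-class, per-condition average precision can be sketched as below. This minimal illustration assumes detections have already been matched to ground truth (each carries a true/false-positive flag) and that each detection is tagged with a hypothetical weather label such as "rain" or "clear"; it computes AP as the area under the precision-recall curve with all-point interpolation. The official nuScenes detection metric matches by center distance and averages over several distance thresholds, so numbers from this sketch are not directly comparable to it.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# One detection: (weather tag, class name, confidence score, is_true_positive).
Detection = Tuple[str, str, float, bool]


def average_precision(scored: List[Tuple[float, bool]], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    `scored` holds (confidence, is_true_positive) for every detection of the class;
    `num_gt` is the number of ground-truth objects of that class.
    """
    if num_gt == 0:
        return 0.0
    ranked = sorted(scored, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls: List[float] = []
    precisions: List[float] = []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    # Make precision non-increasing from right to left (the precision envelope).
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Sum rectangle areas wherever recall increases.
    ap, prev_recall = 0.0, 0.0
    for r, p in zip(recalls, precisions):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


def ap_by_condition(detections: List[Detection],
                    gt_counts: Dict[Tuple[str, str], int]) -> Dict[Tuple[str, str], float]:
    """AP for every (weather, class) pair listed in `gt_counts`, e.g. ("rain", "pedestrian")."""
    groups: Dict[Tuple[str, str], List[Tuple[float, bool]]] = defaultdict(list)
    for weather, cls, score, is_tp in detections:
        groups[(weather, cls)].append((score, is_tp))
    return {key: average_precision(groups.get(key, []), n) for key, n in gt_counts.items()}
```

Comparing, for example, the ("clear", "car") and ("rain", "car") entries of the returned dictionary exposes the kind of weather-dependent gap described above.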

Conclusion:

This research emphasizes the importance of addressing biases in AI models used for object detection and classification, especially in real-world applications like autonomous driving, where inference can be affected by the presence of biased ground-truth samples. The analysis in this paper highlights the significance of data diversity and its impact on model performance. By measuring selectivity score, cosine similarity, and average precision, it becomes possible to identify biases and work towards developing unbiased AI models.

To mitigate biases in AI models, it is crucial to train models on diverse datasets and consider hybrid learning approaches that combine supervised and active learning. Additionally, efficient communication and computation in edge-AI and federated learning environments can help prevent bias inheritance from ground truth labels.
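The hybrid supervised and active learning idea can be illustrated with one common query strategy, entropy-based uncertainty sampling. The paper does not specify a strategy, so this is an assumption: after each supervised training round, the model scores unlabeled images, and the ones with the most uncertain predictions are sent for annotation and added to the labeled set. The sample ids and the `entropy` and `select_for_labeling` helpers are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of one predicted class distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions: Dict[str, Sequence[float]], budget: int) -> List[str]:
    """Return the ids of the `budget` unlabeled samples with the most uncertain predictions.

    `predictions` maps a (hypothetical) sample id to the model's softmax output.
    """
    ranked = sorted(predictions, key=lambda sid: entropy(predictions[sid]), reverse=True)
    return ranked[:budget]
```

Because a model is often least confident on rare classes or adverse-weather scenes, this rule can steer labeling effort toward under-represented data, though whether it actually reduces bias would have to be verified with metrics such as selectivity score and average precision.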

Overall, this study contributes to the understanding of bias in AI models and provides insights into developing unbiased AI models for future vehicular edge services. By addressing biases, we can enhance the fairness and accuracy of AI systems, enabling more reliable and equitable decision-making processes in various domains.

The paper is available at: https://zenodo.org/record/7541438

The IEEE version is available at: https://ieeexplore.ieee.org/document/9996662