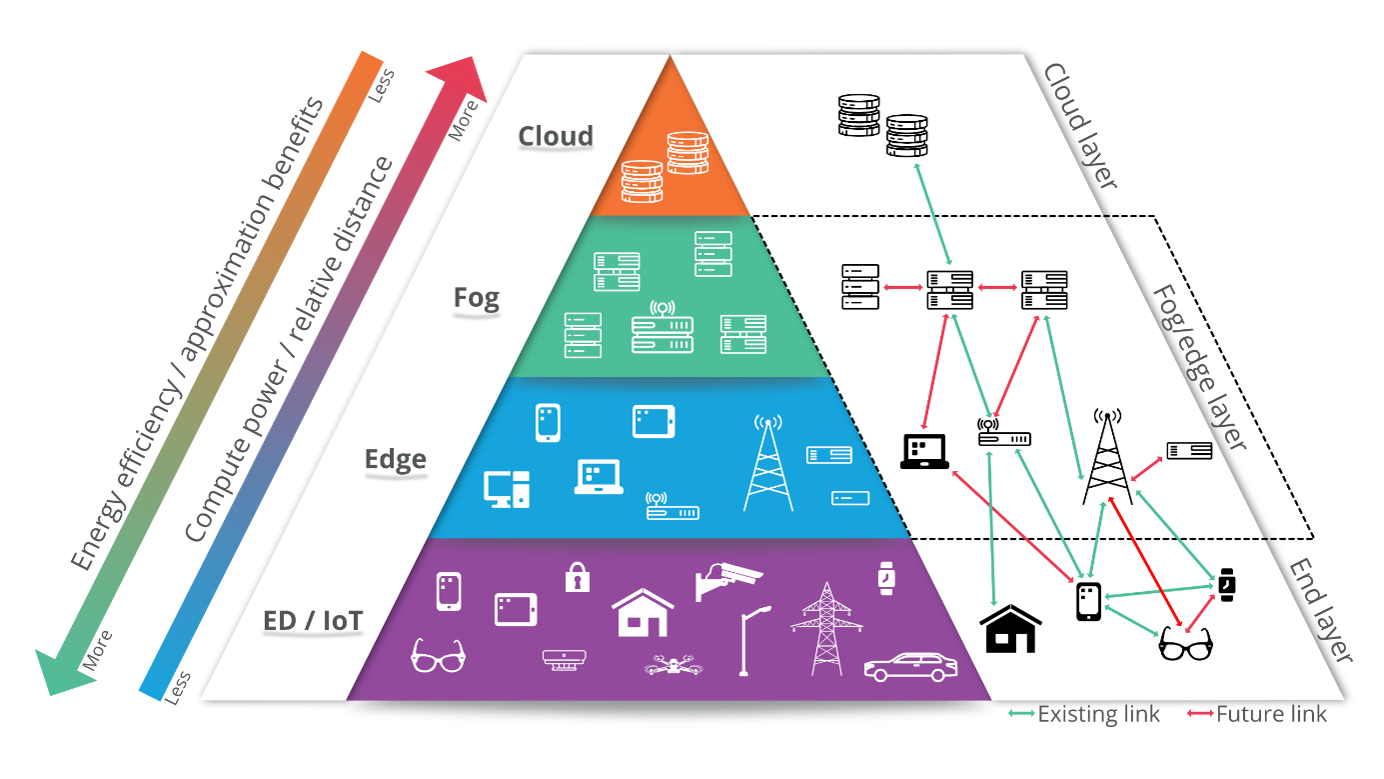

The widespread popularity of Internet of Things (IoT) devices and smartphones has greatly increased the amount of data produced. Until now, these data have been transferred to, stored in, and processed in the Cloud (large, centralized datacenters), as the data-generating devices typically have limited energy and computation resources. Examples of these devices and their connections are shown in Fig. 1. Yet, as more devices are connected to the Internet, contention for network resources renders this solution impractical. Fortunately, developments in integrated circuits and computer architecture mean that connected devices have become more powerful. In parallel, the applications consuming the data have shifted toward ones that provide acceptable results even when some computations are performed erroneously. The Edge computing and Approximate Computing (AxC) paradigms exploit these trends to reduce applications' energy consumption and to carry them out nearer to the end user without relying on the Cloud.

Figure 1 Examples of devices and their current and projected connections in each computing paradigm. Also indicated is the expected decrease in overall system energy as more data is processed near the Edge, meaning it no longer needs routing through many network layers.

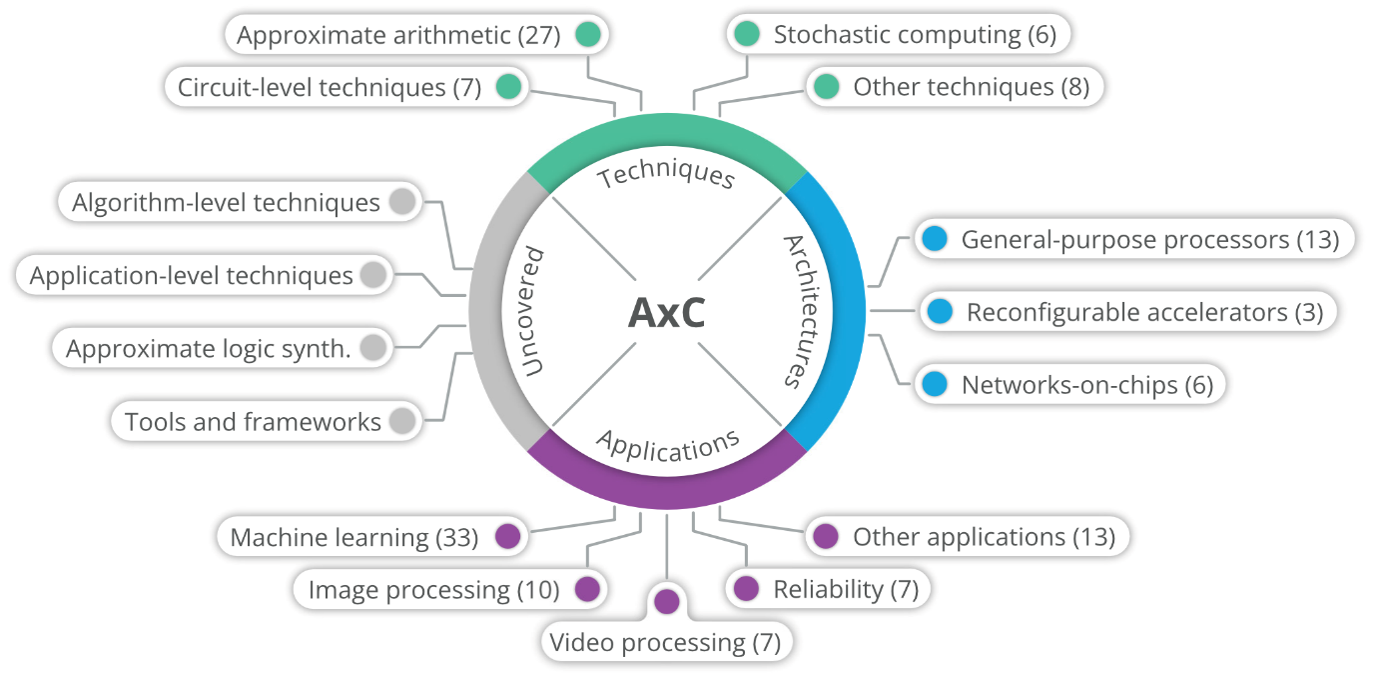

In our review, we focus on the intersection of these paradigms, surveying state-of-the-art implementations of AxC in circuits or hardware architectures and Edge-related applications that can benefit from it. We have carried out our review as systematically as possible to ensure its reproducibility. It covers the categories displayed in Fig. 2 below, where the number of papers in each category is given in parentheses.

Figure 2 Overview of the topics covered in the review and the number of papers in each category.

At the circuit level, approximations are mostly introduced to reduce power consumption or chip area. They make circuitry function inaccurately by operating it at sub-nominal voltage, by over-scaling its clock frequency, or through deliberate design changes. One particularly popular branch of research in this direction focuses on arithmetic modules, such as adders and multipliers, that are costly in terms of area; another focuses on memories and inexact reuse of previously obtained results. Both these techniques find application in general-purpose computing architectures, for which researchers have also proposed replacing complex functions with lightweight Neural Networks (NNs) and ways to reduce communication in multi-core systems.
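To make the idea of a deliberately inexact arithmetic module concrete, the following is a minimal behavioral sketch of one well-known design from the literature, the lower-part OR adder (LOA). It is an illustration chosen here, not a design singled out by the review. The function name `loa_add` and the parameters `width` and `approx_bits` are hypothetical. The lower `approx_bits` bits are combined with a bitwise OR, which needs no carry chain, while the upper bits are added exactly.

```python
def loa_add(a: int, b: int, width: int = 8, approx_bits: int = 4) -> int:
    """Behavioral model of a lower-part OR adder (illustrative sketch only).

    The lower `approx_bits` bits are approximated with a bitwise OR, and the
    remaining upper bits are added exactly. A single AND of the most
    significant lower bits serves as the carry into the upper part.
    """
    if not 0 <= approx_bits <= width:
        raise ValueError("approx_bits must lie between 0 and width")

    mask = (1 << width) - 1
    a &= mask
    b &= mask

    low_mask = (1 << approx_bits) - 1
    low = (a | b) & low_mask  # approximate lower part: no carry propagation

    # Carry-in for the exact upper part, taken from the top bit of the lower part.
    carry = ((a & b) >> (approx_bits - 1)) & 1 if approx_bits > 0 else 0

    high = (a >> approx_bits) + (b >> approx_bits) + carry  # exact upper part
    return (high << approx_bits) | low


if __name__ == "__main__":
    # Report the worst-case absolute error over all 8-bit operand pairs.
    for k in (0, 2, 4):
        worst = max(
            abs(loa_add(a, b, 8, k) - (a + b))
            for a in range(256)
            for b in range(256)
        )
        print(f"approx_bits={k}: worst-case |error| = {worst}")
```

For example, `loa_add(17, 34)` returns the exact sum 51 because the lower bits of the operands do not overlap, whereas `loa_add(8, 8)` returns 24 instead of 16. In this model the absolute error never exceeds `2 ** (approx_bits - 1)`, so `approx_bits` acts as the knob that trades accuracy for a shorter carry chain.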

As Fig. 2 reveals, AxC is commonly applied to Machine Learning (ML). It is well known that NNs are resilient to reduced numerical precision, and many works exploit this by using some of the techniques described above. However, other ML models show a similar resilience and may benefit from acceleration in bespoke hardware. In addition to ML, multimedia applications, whose outputs are consumed by humans with inherently low sensitivity to noise, are also frequently approximated. Regardless of the application, AxC can substantially reduce system power consumption.
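The resilience to reduced numerical precision mentioned above can be illustrated with a small sketch of symmetric uniform quantization, one common way of lowering precision. The helper names `quantize` and `dequantize`, as well as the weight and input values, are hypothetical and chosen only for illustration; they are not taken from the review.

```python
def quantize(values, bits=8):
    """Map floats to signed integer codes of `bits` bits (symmetric, uniform)."""
    qmax = (1 << (bits - 1)) - 1              # e.g. 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest code and clamp to the representable range.
    codes = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return codes, scale


def dequantize(codes, scale):
    """Recover approximate float values from integer codes."""
    return [c * scale for c in codes]


# Hypothetical weights and inputs of a single neuron.
weights = [0.82, -0.41, 0.05, -0.97, 0.33]
inputs = [1.0, 0.5, -2.0, 0.25, 1.5]

exact = sum(w * x for w, x in zip(weights, inputs))
for bits in (8, 4):
    codes, scale = quantize(weights, bits)
    approx = sum(w * x for w, x in zip(dequantize(codes, scale), inputs))
    print(f"{bits}-bit weights: dot product {approx:.4f} (exact {exact:.4f})")
```

Each weight moves by at most half a quantization step (`scale / 2`), so the dot product drifts only slightly at 8 bits and more noticeably at 4 bits. Whether that drift is acceptable depends on the model and the application.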

In addition to surveying existing works on these topics, our review also highlights several directions for future research. Naturally, some involve extending the knowledge and application of existing techniques, such as those above, to improve their impact. Others should explore how to combine techniques across layers, i.e., using circuit-level techniques concurrently with architecture- and software-level techniques, and how to manage these approximations at run-time as easily as possible.

We observe that a large body of work exists in the domains of AxC and Edge computing, but very few works consider them together. Similarly, works rarely use more than one or two AxC techniques. These points lead us to believe that there is room for further improvements in these fields, some of which are needed to advance them toward broad adoption.

The interested reader can access the full paper at: https://dl.acm.org/doi/10.1145/3572772

Acronyms:

- AxC: Approximate Computing

- IoT: Internet of Things

- ML: Machine Learning

- NN: Neural Network